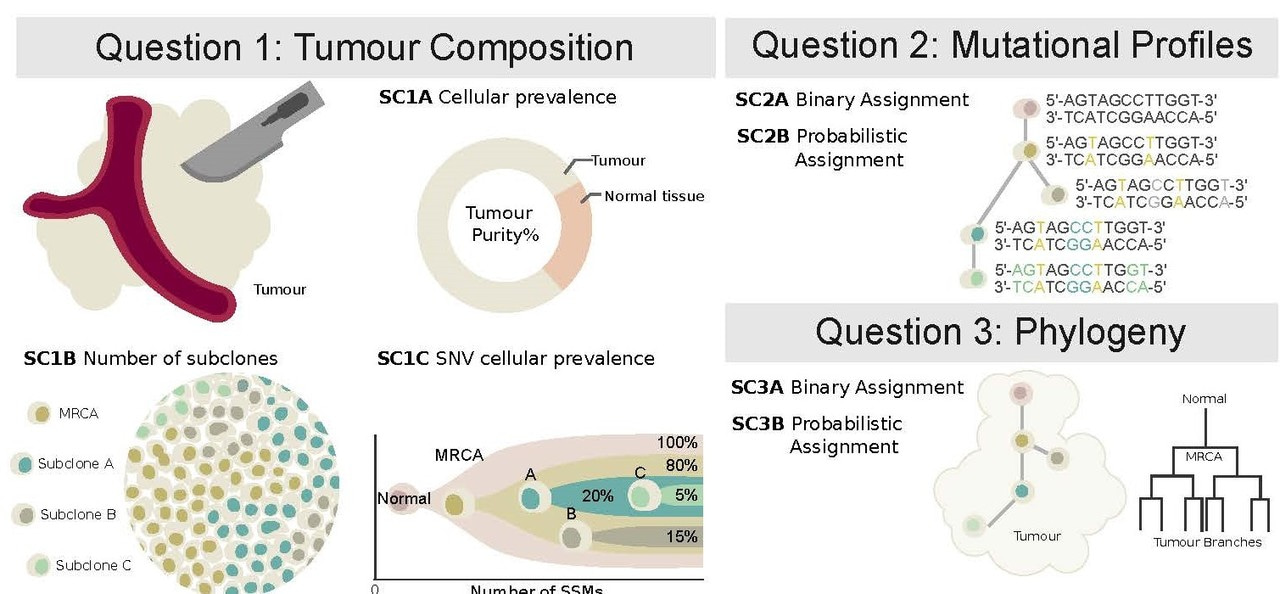

Cancer is a disease of evolution. A single normal cell slowly acquires more and more mutations, each giving it a little bit of an advantage over its neighbours. Eventually, that cell gains enough mutations and enough of an advantage that it has evolved into a full cancer. Similarly, through this process of evolutionary adaptation, cancers eventually develop resistance to most therapies. In a deep way, evolution is the heart of cancer biology. Subclonal reconstruction methods try to use information from tumour DNA sequencing studies to determine the specific evolutionary history of an individual cancer. A broad range of translational studies have started to unpack how evolutionary features of cancer are related to its clinical presentation. Surprisingly, though, there has not yet been a standardization or even a benchmarking of the ways in which evolutionary features of cancer should be described and estimated.

The reasons for this are many. Perhaps the most critical is that defining a gold standard -- what is truth, and how should deviations away from that truth be scored. Defining these features of subclonal reconstruction is the primary topic of our study: A community effort to create standards for tumour subclonal reconstruction. Perhaps the most difficult part of the study was defining the ground-truth in a reproducible, adaptable way. Our understanding of cancer continues to grow, so we needed a ground-truth that could improve over time and yet that was completely understood. This brought us to the idea of simulations.

Using simulations has several advantages over real data. Simulations are generally much cheaper than generating new experimental data. Because they can be custom designed, they can cover rare theoretical and corner cases that may be difficult to find in real data. They also provide a systematic way of evaluating true negatives, because the simulator explicitly designs in both positive and negative cases of any feature. By contrast our knowledge of negatives in real data is usually limited by the limits of detection and other technical considerations of the experimental design. On the other hand, simulations have drawbacks: complex problems require complex simulations, consuming time and resources to generate. Simulations are only as close to real data as our current understanding, and thus reflect our knowledge at a snapshot in time. Further, these assumptions or errors in the simulation process can lead to simulation-specific artifacts, and as a result analyses relying heavily upon simulated datasets can be overfit to features that have no generalizability to real data. Overall, simulations have a key place in benchmarking.

There are several ways to simulate cancer genomics data, largely discriminated by how close to the raw data the simulations are performed. Simulating read-level sequencing data (e.g. BAM files) is the lowest-level of simulation in wide-use today. Tools like BAMSurgeon

We chose to use read-level simulations for our work, and that required a broad range of new features to be added to existing simulation strategies. For example, we needed to ensure correct allele-specific phasing, complex genomic rearrangements and trinucleotide mutational signatures were appropriately incorporated. But most critically, simulating BAM files with arbitrary tumour evolutionary histories and mutation landscapes required phasing reads in parental sets, accounting for allele-specific somatic copy number aberrations occurring at different evolutionary times. This required us to create a complex read-counting and -assignment pipelines to ensure changes were correctly timed and accounted for in the final simulated BAM file.

Creating this read-accounting system turned out to be the single biggest challenge of this study. We encountered issues at many different steps of the workflow, ranging from conceptual discoveries and software challenges. First, as an international collaboration different parts of the analyses were performed in different labs in different countries. For example, simulator development and subclonal copy number calling were performed across Europe, North America and Australia. To facilitate this, we had to develop ways to rapidly share data, development code and output files at scale. Given the large dataset sizes, finding ways to work across multiple HPC and cloud-systems was critical to optimizing our development-testing cycles. We also needed to work hard on portability, ultimately moving the parts of the copy number assessment requiring BAM files to the same computing center where simulations were done.

But a much longer-running source of fun for the research team was the long-running identification of unexpected features (AKA bugs) in the read counting and book-keeping algorithms needed to simulate accurate copy number profiles. Errors ranged from inaccuracies in purity estimates (the estimated fraction of cancer cells), wrong balance of allelic copy number, improper handling of subclonal whole-chromosome and whole-genome events and handling of the sex chromosomes. In retrospect, we significantly underestimated the times needed to develop an accurate simulator, and mid-stream needed to redesign our simulation software to be significantly more robust: partitioning subclonal BAMs for each allele copy early in the pipeline and spike mutations in each independently before merging them rather than subsampling after mutation spike-ins. This greatly increased the space-demands on the pipeline, but made it easier to incorporate changes and to recover from the inevitable crashes of compute nodes. This allowed changes, like simulating the pseudoautosomal region on X and Y independently from the rest of the chromosome, to occur rapidly and without incurring the large-scale compute costs of re-simulating non-sex chromosome data. Similarly, accurately simulating chronological ordering while maintaining the desired subclonal mutation profiles, particularly when combining regions of copy number changes and arbitrary spatial variability across the genome (e.g. replication timing) required many iterations of analysis.

We would love to say that we identified all simulation challenges up-front through our rigorous testing. But we didn’t: input from participants helped us find issues after we released an initial set of simulations. Solving them required the diverse expertise of everyone on the team along with thorough and systematic quality control throughout the development process. We presented and solved these issues as they arose on weekly joint intercontinental calls, during which we hypothesized sources of errors and designed relevant test cases. Between calls, we developed the code and tested our hypotheses. We estimate that we thought we had a “finished” simulator and set of tumours for analysis six times prior to the actual locked dataset in this study.

In our view, this speaks to the idea that a simulator is really a living piece of software, continually evolving to incorporate new aspects of cancer biology, and to fix the inevitable bugs or developments in genomics technology. It’s a deeply multi-disciplinary activity, linking software engineering to computational biology to downstream cancer biology interpretation. In this light, it might actually be a benefit for the research community to coalesce to a smaller number of simulators, each receiving intensive R&D activity so that they evolve in-pace with the rest of our community. Although the path to developing a subclonally-aware read-level simulator was complex, we believe it will be a useful addition to the research community, far beyond the specific characterizations about subclonal reconstruction we have used it for to date!

Please sign in or register for FREE

If you are a registered user on Research Communities by Springer Nature, please sign in