Nowadays, deep learning, especially deep neural networks (DNNs), has achieved stunning success in image reconstruction and denoising1-3. In most applications, DNNs learn the end-to-end image transformation from large amounts of exemplary data, without the need for explicit analytical models. Moreover, this data-driven inversion scheme can approximate not only the pseudo-inverse of the image degradation process, but also the stochastic characteristics of the "good" solutions. Hence, deep learning algorithms typically offer wider applicability and better solutions than analytical ones for inference tasks such as image super-resolution (SR) and denoising. For instance, properly trained DNNs have been demonstrated to restore biological structures from low-SNR images4, 5, and even to infer SR images from single or multiple diffraction-limited images6-9.

Challenges of Deep Learning in Microscopic Image Processing

Nevertheless, deep-learning-based computational approaches also pose substantial challenges and limitations10. First, image transformation is essentially an ill-posed problem: although deep learning models leverage large amounts of exemplary data to learn a statistically good end-to-end mapping, there exist multiple solutions in the high-dimensional space of all possible images that correspond to the same input10, 11. Second, DNNs are generally subject to a learning bias towards low-frequency patterns (i.e., simple or coarse structures), termed spectral bias12. Spectral bias accounts for the lower resolution of images inferred by deep-learning super-resolution (DLSR) models relative to the ground-truth (GT) images7. Third, DNN models are subject to epistemic uncertainty (also called model uncertainty)13, which is related to the training data and network architecture and reflects the extent to which the information conveyed by restored images can be trusted. Fourth, training DNNs requires paired input images and corresponding high-quality GT images. However, collecting high-quality GT data, especially in fluorescence bioimaging, is extremely laborious and sometimes even impractical owing to the highly dynamic nature of living biological specimens14, 15. These challenges degrade the fidelity and quantifiability of DLSR images and impede the wider application of deep learning in microscopy.
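
To make the ill-posedness concrete, here is a toy sketch in which 2x2 average pooling stands in for the blurring and pixel binning of a microscope (a simplifying assumption; a real degradation also involves the OTF and noise). Adding any pattern whose mean is zero inside every 2x2 block leaves the low-resolution observation unchanged, so two different high-resolution images map to exactly the same input. The names `downsample_2x`, `hr_a`, `hr_b` and `null_pattern` are illustrative only.

```python
import numpy as np

def downsample_2x(img):
    """Toy degradation (assumed): average each non-overlapping 2x2 block."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

rng = np.random.default_rng(0)
hr_a = 0.5 + rng.random((4, 4))  # one candidate high-resolution image

# A checkerboard that sums to zero inside every 2x2 block lies in the
# null space of the pooling operator: it is invisible after degradation.
null_pattern = np.tile(np.array([[1.0, -1.0], [-1.0, 1.0]]), (2, 2))
hr_b = hr_a + 0.3 * null_pattern  # a different high-resolution image

print(np.allclose(downsample_2x(hr_a), downsample_2x(hr_b)))  # True
print(np.allclose(hr_a, hr_b))                                # False
```

A model trained on such data can at best learn which of these candidates is statistically most plausible; the measurement alone cannot decide between them.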

Prior Knowledge to Rationalize Implementations of DNNs

In general, independence from explicit analytical models and prior knowledge is recognized as the most prominent characteristic of deep learning algorithms. However, recent studies show that incorporating additional information into DNN models is beneficial in image transformation tasks. For instance, structural information from diffraction-limited images was used to improve the reconstruction of high-quality PALM/STORM images from heavily under-sampled localization data16, and the power spectrum coverage of feature maps proved a useful indicator for enhancing DNNs' ability to learn high-frequency feature representations in image super-resolution tasks7. Inspired by these studies, we devised a DNN architecture that integrates the intrinsic physical model of a specific microscopy modality into the network to guide, or rationalize, the training and inference processes. In our view, an imaging process can be described by three general aspects: the physical characteristics of the optical setup, e.g., the illumination patterns and the optical transfer function (OTF); the characteristics of the specimen, e.g., the sparsity and spatial continuity of fluorescent structures; and the characteristics of the data, e.g., the temporal continuity of specimen movement. All of these properties could potentially be integrated into a DNN to rationalize its output, so we termed this concept rationalized deep learning (rDL).

Rationalized Deep Learning Structured Illumination Microscopy

Next, we took SR reconstruction of structured illumination microscopy (SIM) images under low signal-to-noise ratio (SNR) conditions as our first implementation of rDL. Although SIM is often recognized as the best option for SR live-cell imaging, a long-standing shortcoming is that its reconstruction requires a relatively high SNR in each raw image to produce high-quality SR-SIM images17, 18. To obtain high-quality SIM images under low-SNR conditions, we first decoupled the task into two subtasks: denoising the raw SIM images and reconstructing the SR image.

This strategy theoretically alleviates the ill-posedness and reduces the burden on the DNN, because the network does not need to predict complicated high-frequency details. However, if we simply train a denoising model in the common end-to-end manner4, 5, the model can hardly recognize the pattern information hidden in the noisy images or preserve the delicate Moiré fringes, causing severe artifacts in the subsequent SIM reconstruction. For a specific SIM setup, however, the OTF and the physical model of the image acquisition process are known in advance. Inspired by this, we conditioned the network on this prior knowledge in both the training and inference phases by adopting pattern-modulated images as an auxiliary input to the DNN. These images are generated from a predicted SR image, the pre-determined SIM patterns and the system's OTF, and they rationally guide network training and inference (Fig. 1).
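
The pattern-modulated images can be sketched with the standard SIM forward model, in which each raw frame is the specimen multiplied by one illumination pattern and then filtered by the OTF: D_k = IFFT(FFT(S · P_k) · OTF). The sketch below is not the authors' code. It assumes an aberration-free circular-pupil OTF, sinusoidal patterns P_k = 1 + m·cos(2π k·r + φ_k) with three orientations and three phases, and that the predicted SR image S and the raw frames share one pixel grid (a real implementation would also resample to the camera grid). The functions `ideal_otf`, `sim_patterns` and `pattern_modulated_images`, and all parameter values, are hypothetical.

```python
import numpy as np

def ideal_otf(shape, cutoff):
    """Incoherent OTF of an aberration-free circular pupil (assumed).
    `cutoff` is the OTF support radius in cycles/pixel."""
    fy = np.fft.fftfreq(shape[0])[:, None]
    fx = np.fft.fftfreq(shape[1])[None, :]
    rho = np.clip(np.hypot(fx, fy) / cutoff, 0.0, 1.0)
    return (2 / np.pi) * (np.arccos(rho) - rho * np.sqrt(1 - rho ** 2))

def sim_patterns(shape, k_mag, angles, n_phases=3, depth=0.8):
    """Sinusoidal illumination patterns, one per (orientation, phase)."""
    y, x = np.indices(shape)
    patterns = []
    for theta in angles:
        kx, ky = k_mag * np.cos(theta), k_mag * np.sin(theta)
        for p in range(n_phases):
            phase = 2 * np.pi * p / n_phases
            patterns.append(1 + depth * np.cos(2 * np.pi * (kx * x + ky * y) + phase))
    return np.stack(patterns)

def pattern_modulated_images(sr_image, patterns, otf):
    """Forward-model each raw frame: D_k = IFFT(FFT(S * P_k) * OTF)."""
    spectra = np.fft.fft2(sr_image[None] * patterns, axes=(-2, -1))
    return np.real(np.fft.ifft2(spectra * otf[None], axes=(-2, -1)))

# Usage with a random stand-in for the network's predicted SR image.
rng = np.random.default_rng(1)
sr = rng.random((64, 64))
otf = ideal_otf(sr.shape, cutoff=0.25)
pats = sim_patterns(sr.shape, k_mag=0.2, angles=np.deg2rad([0, 60, 120]))
modulated = pattern_modulated_images(sr, pats, otf)
print(modulated.shape)  # (9, 64, 64)
```

Because the patterns and OTF are fixed by the setup, these nine images carry the expected fringe structure into the network independently of how noisy the acquired raw frames are.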

Surprisingly, we found that the proposed scheme performed well and overcame the aforementioned challenges of deep learning algorithms. First, denoising the raw images allows the network to work only within the diffraction-limited domain, making it easier to learn the high-frequency information that is down-modulated into the raw SIM images and thus greatly alleviating the spectral bias of current DLSR models. Second, the additional information carried by the illumination patterns, which is independent of detection conditions, not only restricts the denoised raw images to a lower-dimensional space, lowering the uncertainty of the network output, but also constrains them to the physical model of SIM, which guarantees high-quality reconstruction with the classic algorithm. Taken together, the proposed scheme essentially rationalizes the DNN's training and inference with the physical model of SIM.
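
Why down-modulation helps can be seen in one dimension. In this illustrative sketch, an ideal binary low-pass filter stands in for the OTF and all frequencies are hypothetical values in cycles/pixel. A specimen component at 0.30 lies outside a passband of 0.20 and vanishes under uniform illumination, whereas multiplying it by stripes at 0.15 produces a moiré component at the difference frequency 0.30 − 0.15 = 0.15, which is transmitted.

```python
import numpy as np

n = 200
x = np.arange(n)
freqs = np.fft.rfftfreq(n)  # spatial frequencies in cycles/pixel
cutoff = 0.20               # hypothetical passband edge of the detection OTF

def lowpass(signal):
    """Ideal binary low-pass filter standing in for the detection OTF."""
    spectrum = np.fft.rfft(signal)
    spectrum[freqs > cutoff] = 0.0
    return np.fft.irfft(spectrum, n)

def peak_freq(signal):
    """Frequency of the strongest spectral component."""
    return freqs[np.argmax(np.abs(np.fft.rfft(signal)))]

sample = np.cos(2 * np.pi * 0.30 * x)       # fine structure beyond the passband
pattern = 1 + np.cos(2 * np.pi * 0.15 * x)  # sinusoidal illumination stripes

wide_field = lowpass(sample)          # uniform illumination: structure is lost
sim_raw = lowpass(sample * pattern)   # structured illumination: moire survives

print(np.max(np.abs(wide_field)) < 1e-9)  # True
print(round(peak_freq(sim_raw), 3))       # 0.15
```

A denoiser operating on raw frames therefore only has to preserve this in-band component; shifting it back to 0.30 is left to the classic reconstruction, which knows the pattern frequency.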

Generalization of Rationalized Deep Learning Concept

Beyond SIM, the rDL scheme can be generalized to other imaging modalities. Rather than illustrating its usability with a specific imaging technique, we discuss here the possibility of integrating other general prior knowledge, namely the OTF and spatiotemporal continuity, into the rDL scheme.

a) The ability of an imaging system to capture specimen information is described by its OTF: only spatial frequencies within the OTF support can be collected, while frequency components outside it are contributed entirely by various types of noise. Moreover, the transfer efficiency of the OTF usually decreases as spatial frequency increases19, implying that high-frequency signals typically have a lower SNR than low-frequency signals. Therefore, the effective cut-off of the OTF in practical experiments is actually a variable parameter. Based on this, we devised the Fourier noise suppression module (FNSM), which applies a symmetric binary mask in the Fourier domain with a trainable radius to adaptively adjust this boundary. The FNSM can be embedded into any denoising neural network model and provides a consistent boost in denoising performance.
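
A minimal NumPy sketch of a Fourier-domain mask with an adjustable radius follows. Because a hard binary step has zero gradient with respect to its radius, this sketch assumes a sigmoid relaxation of the step (our assumption; how the FNSM makes its radius trainable is not specified here) and writes out the analytic derivative that an autodiff framework would otherwise propagate. `soft_fourier_mask`, `mask_grad_radius`, `fnsm`, `sharpness` and the test values are hypothetical.

```python
import numpy as np

def radial_frequency(shape):
    """Radial spatial frequency |f| (cycles/pixel) on the unshifted FFT grid."""
    fy = np.fft.fftfreq(shape[0])[:, None]
    fx = np.fft.fftfreq(shape[1])[None, :]
    return np.hypot(fx, fy)

def soft_fourier_mask(shape, radius, sharpness=200.0):
    """Radially symmetric mask: ~1 for |f| < radius, ~0 outside.
    A sigmoid replaces the binary step so the radius is differentiable."""
    r = radial_frequency(shape)
    return 1.0 / (1.0 + np.exp(-sharpness * (radius - r)))

def mask_grad_radius(shape, radius, sharpness=200.0):
    """Analytic d(mask)/d(radius) of the sigmoid mask."""
    m = soft_fourier_mask(shape, radius, sharpness)
    return sharpness * m * (1.0 - m)

def fnsm(image, radius, sharpness=200.0):
    """Suppress spectral components outside the (soft) radius."""
    spectrum = np.fft.fft2(image)
    mask = soft_fourier_mask(image.shape, radius, sharpness)
    return np.real(np.fft.ifft2(spectrum * mask))

# Usage: a band-limited toy image corrupted by white noise.
y, x = np.indices((64, 64))
clean = 2.0 + np.cos(2 * np.pi * 3 * x / 64) + np.cos(2 * np.pi * 2 * y / 64)
rng = np.random.default_rng(3)
noisy = clean + rng.normal(scale=0.5, size=clean.shape)
denoised = fnsm(noisy, radius=0.15)

mse_noisy = np.mean((noisy - clean) ** 2)
mse_denoised = np.mean((denoised - clean) ** 2)
print(mse_denoised < mse_noisy)  # True
```

In a network, `radius` would be a learnable scalar updated through the denoising loss, so the mask can shrink toward the effective cut-off when high frequencies are dominated by noise.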

b) As mentioned above, acquiring high-quality GT data is difficult and sometimes impractical for dynamic biological specimens. However, most everyday imaging experiments acquire a series of consecutive images, i.e., a time-lapse or volumetric dataset, rather than a single image. Inspired by the Noise2Noise configuration20, we exploited the redundancy of information in consecutive noisy raw images to construct temporally or spatially interleaved self-supervised learning mechanisms (TiS/SiS-rDL) that can train a robust denoiser with noisy data only. Moreover, we introduced an amending constraint to eliminate the non-zero expectation gap between the two adjacent images used as input and GT in SiS-rDL, finally enabling TiS/SiS-rDL models to produce denoised results similar to those of supervised models.
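
The interleaving idea can be sketched as follows, assuming a static toy scene with independent Poisson noise in every frame. Adjacent frames of a stack are split into input/target pairs; because each target equals the clean signal plus zero-mean noise, the prediction that minimizes the mean squared error against the noisy targets is their mean, which converges to the clean image (the Noise2Noise argument). `interleaved_pairs` is a hypothetical helper; applying the same split along z gives spatially interleaved pairs, and the amending constraint for the expectation gap between adjacent images is not modeled here.

```python
import numpy as np

def interleaved_pairs(stack):
    """Split a (T, H, W) time-lapse, or a (Z, H, W) volume, into
    input/target pairs: even-indexed frames are inputs, the following
    odd-indexed frames are targets. An unpaired last frame is dropped."""
    n = (stack.shape[0] // 2) * 2
    return stack[0:n:2], stack[1:n:2]

# Toy check of the Noise2Noise argument on a static scene.
rng = np.random.default_rng(2)
clean = rng.uniform(20.0, 80.0, size=(8, 8))  # expected photon counts
stack = rng.poisson(clean, size=(1000, 8, 8)).astype(float)
inputs, targets = interleaved_pairs(stack)

# For a pixel-wise constant predictor c, sum((c - targets)**2) is minimized
# by c = targets.mean(axis=0), which approaches the clean signal.
best_constant = targets.mean(axis=0)
print(inputs.shape, targets.shape)  # (500, 8, 8) (500, 8, 8)
rel_err = np.max(np.abs(best_constant - clean) / clean)
print(rel_err < 0.1)  # True
```

With moving specimens, adjacent frames no longer share the same expected image, which is exactly the gap the amending constraint described above is meant to close.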

Notably, because FNSM and TiS/SiS-rDL are independent of the imaging technique and neural network architecture, they can be broadly applied to improve the performance of various imaging systems, e.g., confocal microscopy, light-sheet microscopy, or two-photon fluorescence microscopy. The successful implementation of FNSM and TiS/SiS-rDL further extends the rDL concept and broadens its applications.

Epilogue

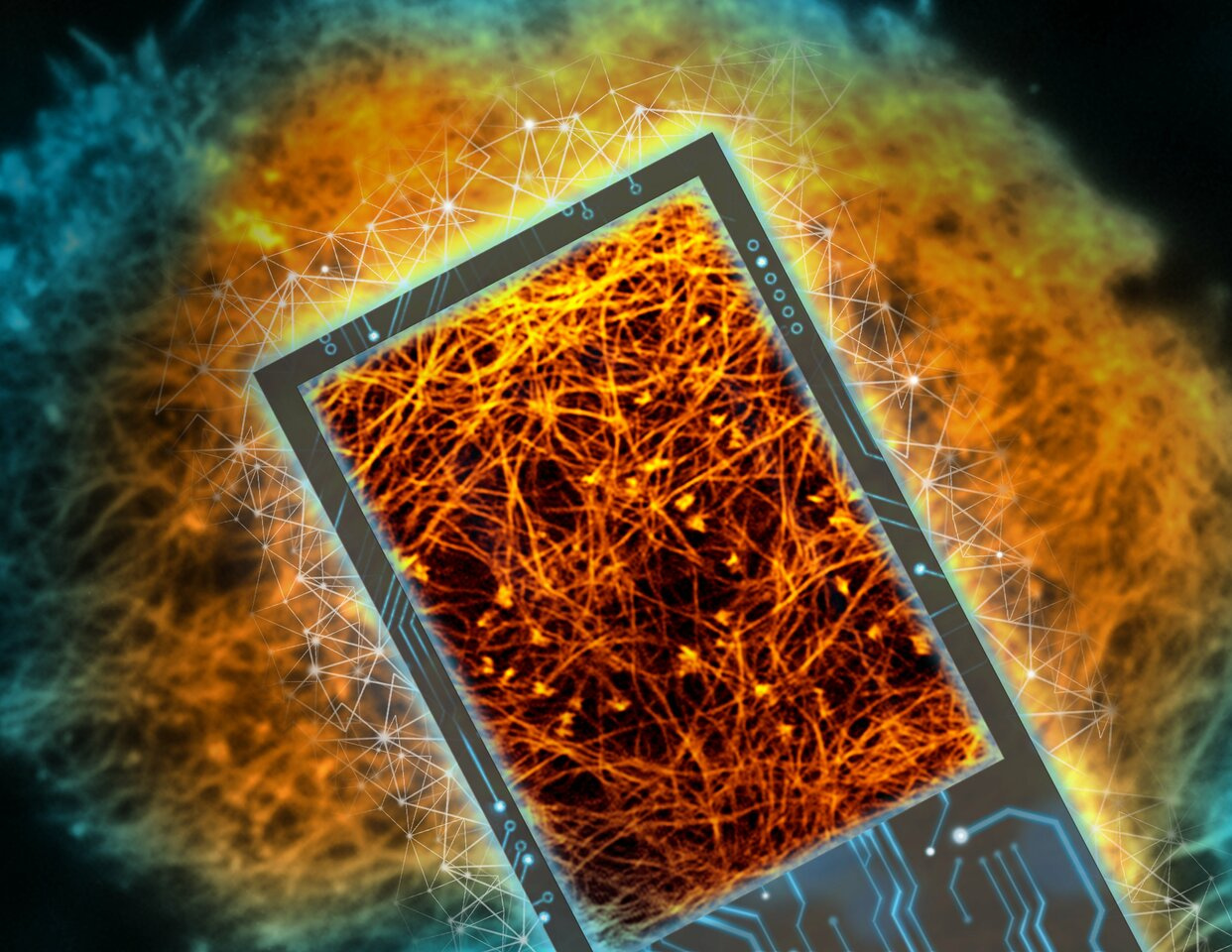

Let's come back to the question raised in the title of this article: how can deep learning in microscopy be made more rational? Our answer is to integrate the physical model and other prior knowledge of imaging into the design and execution of deep learning techniques, i.e., rationalized deep learning. We believe that the rDL methodologies described and demonstrated here fill an unmet need for minimally invasive 2D/3D imaging of intracellular dynamics at ultra-high spatial and temporal resolution, with high fidelity and quantifiability, over long durations (Fig. 2), and that the concept will inspire further developments in the burgeoning field of deep-learning-based microscopy.

References

1. Tian, C. et al. Deep learning on image denoising: An overview. Neural Networks (2020).
2. McCann, M.T., Jin, K.H. & Unser, M. Convolutional neural networks for inverse problems in imaging: A review. IEEE Signal Processing Magazine 34, 85-95 (2017).
3. Yang, W. et al. Deep learning for single image super-resolution: A brief review. IEEE Transactions on Multimedia 21, 3106-3121 (2019).
4. Weigert, M. et al. Content-aware image restoration: pushing the limits of fluorescence microscopy. Nature Methods 15, 1090-1097 (2018).
5. Chen, J. et al. Three-dimensional residual channel attention networks denoise and sharpen fluorescence microscopy image volumes. Nature Methods 18, 678-687 (2021).
6. Qiao, C. et al. 3D Structured Illumination Microscopy via Channel Attention Generative Adversarial Network. IEEE Journal of Selected Topics in Quantum Electronics 27, 1-11 (2021).
7. Qiao, C. et al. Evaluation and development of deep neural networks for image super-resolution in optical microscopy. Nature Methods 18, 194-202 (2021).
8. Jin, L. et al. Deep learning enables structured illumination microscopy with low light levels and enhanced speed. Nature Communications 11, 1934 (2020).
9. Wang, H. et al. Deep learning enables cross-modality super-resolution in fluorescence microscopy. Nature Methods 16, 103-110 (2019).
10. Belthangady, C. & Royer, L.A. Applications, promises, and pitfalls of deep learning for fluorescence image reconstruction. Nature Methods 16, 1215-1225 (2019).
11. Lugmayr, A., Danelljan, M., Van Gool, L. & Timofte, R. in European Conference on Computer Vision 715-732 (Springer, 2020).
12. Rahaman, N. et al. in International Conference on Machine Learning 5301-5310 (PMLR, 2019).
13. Kendall, A. & Gal, Y. What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision? Advances in Neural Information Processing Systems 30, 5574-5584 (2017).
14. Laine, R.F., Arganda-Carreras, I., Henriques, R. & Jacquemet, G. Avoiding a replication crisis in deep-learning-based bioimage analysis. Nature Methods 18, 1136-1144 (2021).
15. Li, X. et al. Reinforcing neuron extraction and spike inference in calcium imaging using deep self-supervised denoising. Nature Methods 18, 1395-1400 (2021).
16. Ouyang, W., Aristov, A., Lelek, M., Hao, X. & Zimmer, C. Deep learning massively accelerates super-resolution localization microscopy. Nature Biotechnology 36, 460-468 (2018).
17. Ball, G. et al. SIMcheck: a toolbox for successful super-resolution structured illumination microscopy. Scientific Reports 5, 1-12 (2015).
18. Wu, Y. & Shroff, H. Faster, sharper, and deeper: structured illumination microscopy for biological imaging. Nature Methods 15, 1011-1019 (2018).
19. Li, D. et al. Extended-resolution structured illumination imaging of endocytic and cytoskeletal dynamics. Science 349, aab3500 (2015).
20. Lehtinen, J. et al. Noise2Noise: Learning image restoration without clean data. arXiv preprint arXiv:1803.04189 (2018).
