Humans and animals can predict what will happen in the future and act appropriately by inferring how the sensory inputs were generated from underlying hidden causes. The free-energy principle1,2 is a theory of the brain that can explain how these processes occur in a unified way. However, how the fundamental units of the brain, such as the neurons and synapses, implement this principle has yet to be fully established. In our paper, “Canonical neural networks perform active inference3,” we have mathematically shown that neural networks that minimise a cost function implicitly follow the free-energy principle and actively perform statistical inference. We have reconstructed a biologically plausible cost function for neural networks based on the equation of neural activity and shown that the reconstructed cost function is identical to variational free energy, which is the cost function of the free-energy principle. This equivalence speaks to the free-energy principle as a universal characterisation of neural networks, implying that even at the level of the neurons and synapses, the neural networks can autonomously infer the underlying causes from the observed data, just as a statistician would. The proposed theory will advance our understanding of the neuronal basis of the free-energy principle, leading to future applications in the early diagnosis and treatment of psychiatric disorders, and in the development of brain-inspired artificial intelligence that can learn like humans.

Background

The brain has been modelled using two major theoretical approaches. The first is the theory of dynamical systems, which focuses on the dynamics of brain activity. The brain is composed of many neurons, and the activity of the neurons and synapses can be written in the form of differential equations. The second views the brain as an agent that processes information, and draws on knowledge accumulated in statistics and machine learning to understand the brain from an information-processing perspective. However, these two approaches have been developed independently of each other, and a precise relationship between them has not yet been established. To address this issue, we developed a scheme to link a recent information-theoretic view of the brain, the free-energy principle, with the neuronal and synaptic equations that have long been studied.

Briefly, the free-energy principle, proposed by Friston, states that the perception, learning, and action of biological organisms are determined so as to minimise variational free energy (an upper bound on sensory surprise), which in turn enables the organisms to adapt to the external world. In the example shown in Fig. 1, when the external world (the person) generates some signal, the agent (the dog) infers the hidden states or causes (the person's feelings) based only on directly observable sensory inputs, and encodes its posterior expectation about these hidden states in its brain. The agent can perform (variational) Bayesian inference by updating the posterior expectation to minimise variational free energy. Furthermore, the agent can maximise the probability of obtaining the preferred sensory input (a treat) by actively inferring4 the action that minimises the expected free energy in the future.
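The action-selection step can be illustrated with a small discrete example. The sketch below scores each one-step action by expected free energy written as risk (the divergence of predicted outcomes from preferred outcomes) plus ambiguity (the expected uncertainty of outcomes given hidden states), a decomposition used in the active-inference literature4. The two-state "dog and person" world, the action names `sit` and `bark`, and every probability are hypothetical and chosen only for illustration; the paper's own simulations are not reproduced here.

```python
import math

def entropy(p):
    """Shannon entropy (nats) of a discrete distribution."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def kl_divergence(p, q):
    """KL[p || q] in nats; assumes q > 0 wherever p > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def mat_vec(M, v):
    """Multiply a list-of-rows matrix M by the vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]

def expected_free_energy(q_s, A, B_a, C):
    """G(a) = risk + ambiguity for a one-step policy.
    A[o][s] = p(o | s), B_a[s_next][s] = p(s_next | s, a), C[o] = preferred outcomes."""
    q_next = mat_vec(B_a, q_s)      # predicted hidden states after acting
    q_o = mat_vec(A, q_next)        # predicted outcomes
    risk = kl_divergence(q_o, C)    # how far predicted outcomes are from preferences
    ambiguity = sum(q_next[s] * entropy([row[s] for row in A])
                    for s in range(len(q_next)))  # expected outcome uncertainty
    return risk + ambiguity

def select_action(q_s, A, B, C):
    """Return the lowest-G action, all G values, and softmax(-G) policy probabilities."""
    G = {a: expected_free_energy(q_s, A, B_a, C) for a, B_a in B.items()}
    z = sum(math.exp(-g) for g in G.values())
    policy = {a: math.exp(-g) / z for a, g in G.items()}
    return min(G, key=G.get), G, policy

# Hypothetical toy world: hidden state 0 = "person pleased", 1 = "person annoyed";
# outcome 0 = "treat", 1 = "no treat".
A = [[0.9, 0.1],
     [0.1, 0.9]]
B = {"sit":  [[0.9, 0.8], [0.1, 0.2]],   # sitting tends to please the person
     "bark": [[0.2, 0.1], [0.8, 0.9]]}   # barking tends to annoy the person
C = [0.95, 0.05]                          # the dog prefers treats
q_s = [0.5, 0.5]                          # current belief about the person's mood

best, G, policy = select_action(q_s, A, B, C)
print(best, {a: round(g, 3) for a, g in G.items()})
```

Here both actions are equally ambiguous (the likelihood columns have the same entropy), so the choice is driven by risk: sitting is predicted to yield treats more often, so it has the lower expected free energy.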

Fig. 1. Schematic of the free-energy principle. Here, the external world (the person) generates sensory inputs (observation) from hidden states according to a generative model. The agent (the dog) performs active inference by updating posterior beliefs about hidden states, parameters, and actions to minimise variational free energy F. Policy is also updated by minimising expected free energy G. Figure is reproduced from a Review paper5. (CC BY 4.0)

In fact, it is a mathematical truism that an agent that minimises variational free energy can perform (variational) Bayesian inference and learning. However, whether this provides a biologically plausible account of the corresponding brain mechanisms is a separate issue. How the free-energy principle is implemented at the level of neurons and synapses, and which neuronal substrates underwrite it, remain to be fully elucidated.
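The truism can be checked numerically. In a hypothetical two-state model (the prior and likelihood values below are made up), the sketch minimises F = E_q[ln q(s) − ln p(o, s)] by gradient descent on the logits of q. F converges to the exact Bayesian posterior, and at that point F equals the surprise −ln p(o), while any other q gives a larger F. The softmax parameterisation and the step size are illustrative choices, not the scheme used in the paper.

```python
import math

def free_energy(q, joint):
    """Variational free energy F = sum_s q(s) [ln q(s) - ln p(o, s)]."""
    return sum(qs * (math.log(qs) - math.log(js)) for qs, js in zip(q, joint) if qs > 0)

def softmax(v):
    m = max(v)
    e = [math.exp(vi - m) for vi in v]
    z = sum(e)
    return [ei / z for ei in e]

# Hypothetical two-state model: prior p(s) and likelihood p(o | s) of the observed o.
prior = [0.7, 0.3]
likelihood = [0.2, 0.9]
joint = [p * l for p, l in zip(prior, likelihood)]   # p(o, s)
surprise = -math.log(sum(joint))                      # -ln p(o)

# Gradient descent on F with respect to the logits v of q = softmax(v).
# dF/dv_k = q_k * (ln q_k - ln p(o, k) - F)
v = [0.0, 0.0]
for _ in range(2000):
    q = softmax(v)
    F = free_energy(q, joint)
    grad = [qk * (math.log(qk) - math.log(jk) - F) for qk, jk in zip(q, joint)]
    v = [vk - 0.5 * gk for vk, gk in zip(v, grad)]

q = softmax(v)
posterior = [j / sum(joint) for j in joint]           # exact Bayesian posterior
print(q, posterior)
print(free_energy(q, joint), surprise)
```

Because F = KL[q(s) || p(s | o)] − ln p(o), the KL term is the only part that depends on q, which is why the minimum sits at the posterior and why F never drops below the surprise.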

In contrast, some neuroscience theories have a more established correspondence with the neuronal basis. For example, although the brain itself is complex and understanding the mechanisms that underpin its functions is difficult, it is widely accepted that dynamical equations of neural activity and synaptic plasticity can successfully explain physiological phenomena. However, how these phenomena shape brain function remains to be fully understood. To address these issues, this work aimed to provide a mathematical demonstration of the validity of the free-energy principle by bridging the gap between its information-theoretic interpretation and phenomena at the neuronal and synaptic levels.

Findings

First, a differential equation that describes neural activity was defined (Fig. 2, top left). In many research fields, the dynamics of a system can be described by the gradient (i.e., the derivative) of some function. Thus, we assumed that the equations of neurons and synapses can be derived as gradient descent on some biologically plausible cost function for neural networks. Under this assumption, by integrating the equation for neural activity, we could reverse engineer6 and reconstruct the neural network cost function (Fig. 2, middle). Interestingly, the derivative of the resulting cost function with respect to the synaptic weights yields an equation of Hebbian plasticity (Fig. 2, top right), confirming the neurophysiological plausibility of this cost function.
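The reverse-engineering step can be sketched for a single neuron. Assuming a simplified rate equation dx/dt = −logit(x) + w·o + h (our toy choice, not the full network of Fig. 2), integrating −dx/dt over x gives a cost L(x) = x ln x + (1 − x) ln(1 − x) − x(w·o + h). The sketch checks numerically that the activity equation is gradient descent on L, and that differentiating the same L with respect to a weight gives a Hebbian term (postsynaptic activity times presynaptic input). The parameter values are arbitrary.

```python
import math

def logit(x):
    return math.log(x / (1.0 - x))

def drive(w, o, h):
    """Synaptic input plus threshold term: sum_j w_j o_j + h."""
    return sum(wj * oj for wj, oj in zip(w, o)) + h

def dx_dt(x, w, o, h):
    """Hypothetical toy rate equation: dx/dt = -logit(x) + w.o + h."""
    return -logit(x) + drive(w, o, h)

def cost(x, w, o, h):
    """Cost L obtained by integrating -dx/dt over x (additive constant dropped)."""
    return x * math.log(x) + (1.0 - x) * math.log(1.0 - x) - x * drive(w, o, h)

def central_diff(f, z, eps=1e-6):
    return (f(z + eps) - f(z - eps)) / (2.0 * eps)

x, w, o, h = 0.3, [0.5, -1.2], [1.0, 0.4], 0.1

# 1) The activity equation is gradient descent on L: dx/dt = -dL/dx.
dL_dx = central_diff(lambda z: cost(z, w, o, h), x)
print(dx_dt(x, w, o, h), -dL_dx)

# 2) The same L differentiated with respect to a synapse gives dL/dw_j = -x * o_j,
#    so descending L in w is a Hebbian rule: dw_j/dt proportional to x * o_j.
dL_dw0 = central_diff(lambda z: cost(x, [z, w[1]], o, h), w[0])
print(dL_dw0, -x * o[0])
```

The cost function in Fig. 2 contains further terms (such as the sigmoid-transformed weights Ŵ and the constants φ1, φ0) that this toy omits, so the sketch should not be read as the paper's plasticity rule.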

Fig. 2. Schematic of the reverse engineering. Here, x is a vector of neural activity (firing rate), o is a vector of sensory input, W is a matrix of synaptic weights, h is the firing threshold, and L is the cost function of the neural network (where the x bar indicates one minus x, the W hat is the sigmoid function of W, and φ1, φ0 are constants). Moreover, F is variational free energy, sτ is the posterior expectation about hidden states, A is the posterior expectation about parameters, and D is the prior belief about hidden states. (CC BY 4.0)

In contrast, in the free-energy principle and active inference, variational free energy is derived based on the generative model of the external world. Inference and learning are performed by minimising variational free energy (Fig. 2, bottom left and right). Remarkably, as shown in the middle of Fig. 2, the cost function reconstructed from neural activity and variational free energy have the same structure, as depicted by one-to-one correspondences between their components. In other words, the two seemingly different cost functions with different backgrounds are mathematically equivalent, indicating a precise correspondence between the quantities derived from these cost functions, such as ‘neural activity = posterior expectation about hidden states’, ‘synaptic weights = posterior expectation about parameters’, and ‘firing threshold = prior beliefs about hidden states.’
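The correspondence can be illustrated in a stripped-down setting. Assume a single toy neuron whose activity obeys dx/dt = −logit(x) + w·o + h (our simplification), and a hypothetical generative model with a binary hidden state and a one-hot outcome. If each synaptic weight is set to a log-likelihood ratio and the threshold to a log-prior ratio, the activity settles at exactly the Bayesian posterior expectation of the hidden state. Fig. 2 states the correspondence with additional terms (x̄, Ŵ, φ1, φ0) that this sketch leaves out.

```python
import math

def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))

# Hypothetical generative model: hidden state s in {0, 1}, one-hot outcome o in 3 categories.
A = [[0.7, 0.2],   # p(o = 0 | s = 0), p(o = 0 | s = 1)
     [0.2, 0.3],   # p(o = 1 | s = 0), p(o = 1 | s = 1)
     [0.1, 0.5]]   # p(o = 2 | s = 0), p(o = 2 | s = 1)
D = [0.6, 0.4]      # prior p(s)
o = [0, 0, 1]       # observed outcome (category 2)

# Assumed mapping: synaptic weights = log-likelihood ratios, threshold = log-prior ratio.
w = [math.log(row[1] / row[0]) for row in A]
h = math.log(D[1] / D[0])

# Let the toy neuron settle (forward Euler integration of its rate equation).
x, dt = 0.5, 0.05
for _ in range(2000):
    x += dt * (-math.log(x / (1.0 - x)) + sum(wj * oj for wj, oj in zip(w, o)) + h)

fixed_point = sigmoid(sum(wj * oj for wj, oj in zip(w, o)) + h)

# Exact Bayesian posterior q(s = 1 | o) for comparison.
joint = [D[s] * math.prod(A[j][s] ** o[j] for j in range(len(o))) for s in (0, 1)]
posterior_s1 = joint[1] / sum(joint)
print(round(x, 6), round(fixed_point, 6), round(posterior_s1, 6))
```

In this toy, "neural activity = posterior expectation about hidden states" holds exactly at equilibrium, with the weights playing the role of likelihood parameters and the threshold the role of the prior.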

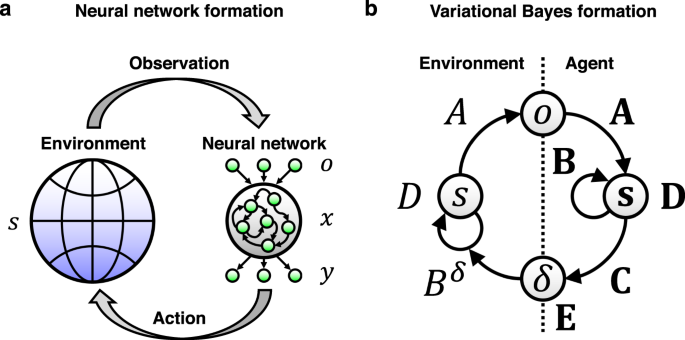

This means that any neural network that minimises a cost function can be cast as following the free-energy principle. Hence, the recapitulation of external world states in the neural network is a universal characteristic of the neurons and synapses, and thereby the neural network implicitly performs Bayesian inference about the underlying causes from observed data, similar to what a statistician does (Fig. 3).

Fig. 3. Equivalence between neural network dynamics and Bayesian inference. The left figure shows a neural network, which receives sensory inputs from the external world, generates internal dynamics, and feeds back responses to the external world in the form of action. This process can be formulated as Bayesian inference as shown in the right figure. (CC BY 4.0)

Furthermore, this work emphasises that this scheme can also explain behavioural control and planning. Experimental studies have shown that neuromodulators, such as dopamine7 and noradrenaline8, can modulate Hebbian or associative plasticity that occurred a short time ago. In this work, we showed that neural networks featuring delayed modulation of Hebbian plasticity can perform planning and adaptive behavioural control by retrospectively reviewing their past decisions. As an example, we showed that simulated neural networks can solve maze tasks (Fig. 4). The results suggest that delayed modulation of Hebbian plasticity accompanied by adaptation of firing thresholds is a sufficient neuronal basis for adaptive behavioural control, including inference, prediction, planning, and action generation.
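Delayed modulation of this kind is often modelled with an eligibility trace: each Hebbian coincidence leaves a decaying trace, and a later neuromodulatory signal converts whatever trace remains into an actual weight change (a so-called three-factor rule). The sketch below is this generic form, with a hypothetical decay constant and learning rate; it is not the rule derived from the free-energy cost in the paper.

```python
def three_factor_learning(pre, post, modulator, eta=0.1, decay=0.8):
    """Hypothetical delayed-modulation rule for a single synapse.
    trace:  decaying memory of recent Hebbian coincidences (post * pre)
    weight: changes only when the modulator m is non-zero, in proportion to the trace."""
    w, trace = 0.0, 0.0
    for x_pre, x_post, m in zip(pre, post, modulator):
        trace = decay * trace + x_post * x_pre
        w += eta * m * trace
    return w

pre      = [1, 0, 0, 0, 0]   # presynaptic spike at t = 0
post     = [1, 0, 0, 0, 0]   # postsynaptic spike at t = 0
late_mod = [0, 0, 0, 1, 0]   # neuromodulator arrives three steps later
no_mod   = [0, 0, 0, 0, 0]

print(three_factor_learning(pre, post, late_mod))   # eta * decay**3
print(three_factor_learning(pre, post, no_mod))     # 0.0: no modulator, no change
```

A negative modulator value turns the same coincidence into depression, which is one simple way to let a later outcome retrospectively strengthen or weaken a past decision.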

Fig. 4. Agent solving a maze task and its neural network. An agent with the neural network shown in the left figure can solve a maze task, as shown in the right figure. Here, the agent observes the states of the neighbouring 11×11 cells and selects up, down, left, or right motion. The agent’s neural network exhibiting delayed modulation of Hebbian plasticity learns how to reach the goal (right edge) in a self-organising manner. (CC BY 4.0)

Perspectives

The proposed theory enables us to reconstruct variational free energy employed by neural networks from empirical data and predict subsequent learning and its attenuation. This scheme could predict the learning process of simulated neural networks solving a new maze without observing activity data. Previous work has shown that in vitro neural networks9,10 minimise variational free energy, in a manner consistent with the theory. In future work, we hope to test the prediction ability of the free-energy principle experimentally, by comparing in vitro and in vivo activity data with theoretical predictions.
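As a minimal illustration of reconstruction from data, assume a single toy neuron whose equilibrium activity is x = sigmoid(w·o + h) (our simplification, not the paper's full network). Then logit(x) is linear in the input, so a least-squares fit recovers w and h, and the fitted threshold can be read as an implicit prior under the assumed mapping h = ln p(s = 1) − ln p(s = 0). The "recordings" below are synthetic and noise-free; real data would require noise handling and the full model.

```python
import math

def logit(x):
    return math.log(x / (1.0 - x))

def fit_line(xs, ys):
    """Ordinary least-squares fit ys = slope * xs + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

# Synthetic equilibrium rates x = sigmoid(w * o + h) from hypothetical ground truth.
true_w, true_h = 1.5, -0.4
inputs = [0.0, 0.5, 1.0, 1.5, 2.0]
rates = [1.0 / (1.0 + math.exp(-(true_w * o + true_h))) for o in inputs]

# Reverse engineering under the toy model: fit logit(x) against the input.
w_hat, h_hat = fit_line(inputs, [logit(x) for x in rates])

# Read the fitted threshold as an implicit prior p(s = 1) (assumed mapping).
implied_prior_s1 = 1.0 / (1.0 + math.exp(-h_hat))
print(round(w_hat, 6), round(h_hat, 6), round(implied_prior_s1, 4))
```

Once w and h are estimated, the toy cost function is fully specified, so its future gradient steps (and hence subsequent learning) could in principle be simulated forward.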

This work is important for making brain activity explainable and for promoting a better understanding of basic neuropsychology and psychiatric disorders, by drawing generic correspondences between neuronal substrates and their functional or statistical meanings. For example, an individual's impaired performance on a given task, or failure to adapt to a given environment, can be associated with inappropriate prior beliefs implicitly encoded by neural network factors (e.g., firing thresholds). This is relevant to symptoms of psychiatric disorders11, such as the hallucinations and delusions observed in patients with schizophrenia. This notion is potentially useful for identifying target neuronal substrates for the early diagnosis and treatment of psychiatric disorders, and for guiding appropriate interventions.
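A toy illustration of how a threshold can act as an implicit prior, assuming the equilibrium relation x = sigmoid(w·o + h) with h = ln p(s = 1) − ln p(s = 0) (our simplification). With a threshold encoding an overly strong prior, the neuron reports the hidden cause as likely even when the evidence is absent or points the other way. This is only a cartoon of the idea of inappropriate priors, not a model of any disorder.

```python
import math

def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))

def inferred_belief(evidence, threshold):
    """Equilibrium activity of the toy neuron, read as q(s = 1).
    evidence = w.o is the summed synaptic input; threshold = h."""
    return sigmoid(evidence + threshold)

typical_h = math.log(0.5 / 0.5)     # flat prior, p(s = 1) = 0.5
biased_h = math.log(0.95 / 0.05)    # overly strong prior, p(s = 1) = 0.95

for evidence in (-1.0, 0.0, 1.0):
    print(evidence,
          round(inferred_belief(evidence, typical_h), 3),
          round(inferred_belief(evidence, biased_h), 3))
```

With the same synaptic evidence, only the threshold differs between the two columns, so any difference in the inferred belief is attributable to the implicit prior.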

Furthermore, the proposed theory provides a unified design principle for neuromorphic devices12 that imitate brain computation to perform statistically optimal inference, learning, prediction, planning, and control. This could dramatically reduce the complexity of designing self-learning neuromorphic devices, leading to reductions in energy consumption, material costs, and computation time. It is therefore potentially important for next-generation artificial intelligence that performs various tasks efficiently.

References

1. Friston, K. J., Kilner, J. & Harrison, L. A free energy principle for the brain. J. Physiol. Paris 100, 70-87 (2006). https://doi.org/10.1016/j.jphysparis.2006.10.001

2. Friston, K. J. The free-energy principle: a unified brain theory? Nat. Rev. Neurosci. 11, 127-138 (2010). https://doi.org/10.1038/nrn2787

3. Isomura, T., Shimazaki, H. & Friston, K. J. Canonical neural networks perform active inference. Commun. Biol. 5, 55 (2022). https://doi.org/10.1038/s42003-021-02994-2

4. Friston, K. J., FitzGerald, T., Rigoli, F., Schwartenbeck, P. & Pezzulo, G. Active inference: A process theory. Neural Comput. 29, 1-49 (2017). https://doi.org/10.1162/NECO_a_00912

5. Isomura, T. Active inference leads to Bayesian neurophysiology. Neurosci. Res. In press (2022). https://doi.org/10.1016/j.neures.2021.12.003

6. Isomura, T. & Friston, K. J. Reverse-engineering neural networks to characterize their cost functions. Neural Comput. 32, 2085-2121 (2020). https://doi.org/10.1162/neco_a_01315

7. Yagishita, S. et al. A critical time window for dopamine actions on the structural plasticity of dendritic spines. Science 345, 1616-1620 (2014). https://doi.org/10.1126/science.1255514

8. He, K. et al. Distinct eligibility traces for LTP and LTD in cortical synapses. Neuron 88, 528-538 (2015). https://doi.org/10.1016/j.neuron.2015.09.037

9. Isomura, T., Kotani, K. & Jimbo, Y. Cultured cortical neurons can perform blind source separation according to the free-energy principle. PLoS Comput. Biol. 11, e1004643 (2015). https://doi.org/10.1371/journal.pcbi.1004643

10. Isomura, T. & Friston, K. J. In vitro neural networks minimise variational free energy. Sci. Rep. 8, 16926 (2018). https://doi.org/10.1038/s41598-018-35221-w

11. Friston, K. J., Stephan, K. E., Montague, R. & Dolan, R. J. Computational psychiatry: the brain as a phantastic organ. Lancet Psychiatry 1, 148-158 (2014). https://doi.org/10.1016/S2215-0366(14)70275-5

12. Roy, K., Jaiswal, A. & Panda, P. Towards spike-based machine intelligence with neuromorphic computing. Nature 575, 607-617 (2019). https://doi.org/10.1038/s41586-019-1677-2

Additional information: This blog post is based on a translation of the following press release (in Japanese): https://www.riken.jp/press/2022/20220114_3/
